We Ignore Scientific Literacy at Our Peril

The Christian Science Monitor

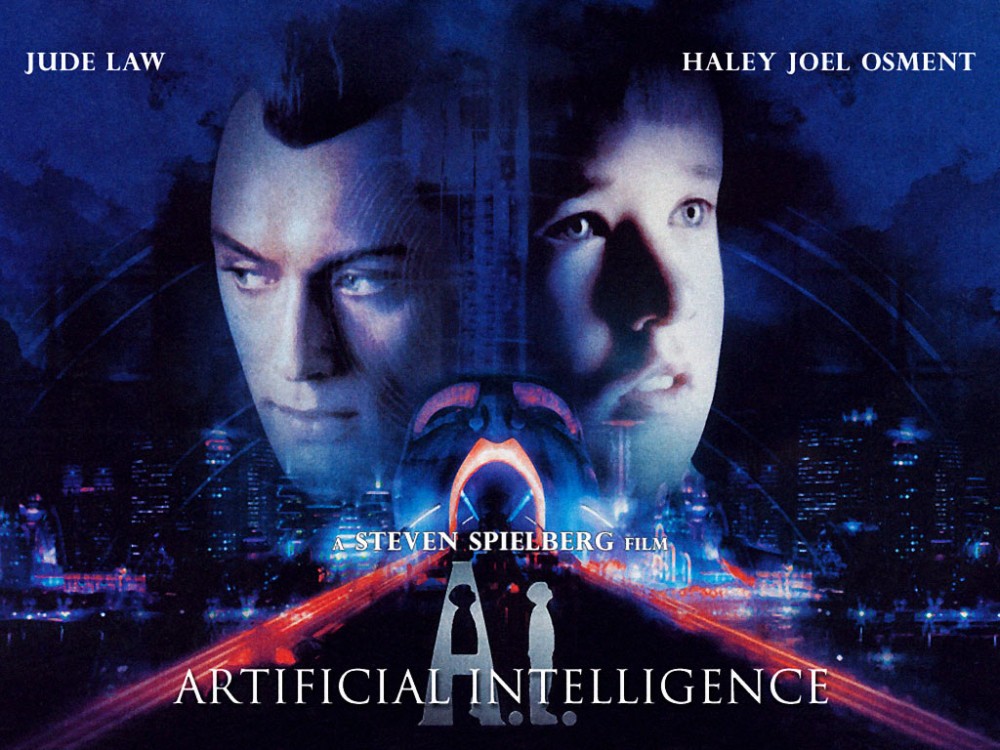

About two-thirds of the way through AI, Steven Spielberg’s latest film, my mind began to wander. I remembered standing at a podium in a vast hotel ballroom in Washington, D.C., several months before. I was speaking to the annual convention of the Energy Bar Association, a professional group of lawyers who work within the oil, gas, and electricity industries in the United States.

I had just finished a lunchtime address about how we are creating problems for ourselves – from global warming to antibiotic-resistant diseases – that increasingly outstrip our ability to supply effective solutions. As the lawyers eagerly polished off their desserts, I argued that the California energy crisis was an example of such an ingenuity gap.

Then it was time for questions. A dapper, middle-aged man came to one of the microphones. Referring to Ray Kurzweil’s latest book, The Age of Spiritual Machines, he questioned whether I was right to suggest that humankind might not be smart enough to solve its critical problems. Kurzweil, he pointed out, writes that we will soon be able to connect our brains directly to computers. “Won’t that allow us,” the questioner went on, “to expand our thinking power infinitely, so that we can solve any possible problem that we face?”

I was both delighted and appalled by this question. I was delighted, because the question was truly “out of the box.” This dapper lawyer had clearly understood the point of my presentation, and he had made a huge, speculative, and intriguing leap to a possible solution. But I was appalled, too, because though he was obviously very smart – clearly a member of America’s intellectual elite – he didn’t realize that there’s a vast gulf between Kurzweil’s speculation and today’s technological reality.

We’re still in the dark ages when it comes to understanding how the human brain works. We know it’s an assemblage of tens of billions of intricately entangled neurons, and we have some general understanding of its structure and anatomy. But we’re just beginning to understand how to classify these neurons, how they are linked across different sections of the brain, and how they communicate with each other through a wide array of neurochemicals. We know even less about how this assemblage of neurons actually processes the information and makes the decisions that are commonplace in our everyday lives. And when it comes to how the brain has feelings, an artistic sensibility, a concept of spirituality, and, above all, a capacity for consciousness – these matters are still best left to philosophers, because brain science has almost nothing to say about them.

The human brain is the most complex structure in the known universe, and it will be a long, long time – probably many decades, if not a century or more – before we understand it well enough to link our heads directly to external computers.

But such silly ideas persist, even among highly educated people. Spielberg’s movie, AI, is full of them. And in all the commentary surrounding the movie, few people have pointed out just how scientifically ill-informed and ludicrous its vision of the future is. Even a rudimentary review of what we already know about the human brain, and what we know about our ability to replicate the brain’s abilities with computers, shows that the world Spielberg depicts will almost certainly not come to pass.

We are still far from getting computers to do something as seemingly simple as understand this sentence. By the year 2001, we were supposed to have HAL in our midst. This renegade computer was the unquestioned villain of Stanley Kubrick’s wonderful 1968 movie 2001: A Space Odyssey (Kubrick, of course, created the original idea and plot-line behind AI). Those of us who saw 2001 when it first came out found HAL’s abilities entirely plausible. In fact, we didn’t give the most basic of those abilities – the ability to converse in natural language – much thought at all. In 1968, it was taken for granted that computers would soon be able to talk. To the extent that we doubted HAL’s plausibility, we focused on its scheming, malicious behavior, and asked if a human-created machine could develop such independence of will and become so wicked.

But it turns out that grasping the meaning of even simple statements in natural language is a far more complex process than most experts initially imagined. We bring an immense store of beliefs, assumptions, and common-sense knowledge about the world to any statement we hear or read, and we are also influenced by many other factors, including the context of that statement and the speaker’s or writer’s intentions. Artificial intelligence, to date, hasn’t come remotely close to fulfilling its original proponents’ dreams. In fact, it hasn’t even left the starting gate. Some researchers are currently trying to replicate the intelligent behavior of very simple animals, like insects, and they’re having trouble achieving even this limited goal. Key cognitive operations that we carry out without much conscious thought – such as coordinated movement in three-dimensional space and planning and organizing complex sequential tasks (for example, cooking a meal) – are far beyond current AI hardware and software.

But the biggest mistake in the movie AI is its decisive separation of emotion from reason. The whole plot of the movie hinges on the idea that the first generation of robots with artificial intelligence will be eminently rational, but not able to express or act on emotions like love and hate. Once artificial intelligence exhibits emotions, the movie suggests, it will be far harder for flesh-and-blood human beings to treat robots as mere machines and not endow them with some moral status, as real people, in our societies.

Perhaps. The problem with this idea, however, is that our emotional capacity is central to our intelligence. Recent brain research shows that it is precisely our ability to integrate emotion with rationality that endows us with our extraordinary intellectual creativity and flexibility. Among other things, our emotions allow us to see ourselves as entities with continuity from the past, through the present, and into the future, and this ability allows us to plan for the future.

In other words, the hallmark of human intelligence is not narrow, calculating, and coldly logical rationality, but the synergistic integration of our feelings with our logic. So it seems that all the unemotional, yet still weirdly human, first-generation robots that populate Spielberg’s movie are simply impossible: without emotion, they couldn’t think or act like human beings at all.

Of course, AI is a parable and a fantasy, and perhaps we shouldn’t hold it to rigorous standards of scientific accuracy. But the movie, and its audiences’ generally uninformed reaction to it, reveal something larger about our societies. Just like the dapper lawyer who questioned me in Washington, most of us no longer have any idea where to find the line between fact and fantasy, between what is scientifically plausible and what is scientific nonsense. In this hyper-technological age, where so many things, perhaps even our survival, depend upon subtle decisions by a scientifically informed citizenry, that ignorance is deeply alarming.

Movie poster for Steven Spielberg's "AI"
